Trees Lecture Notes 1: Lesson 7, Trees

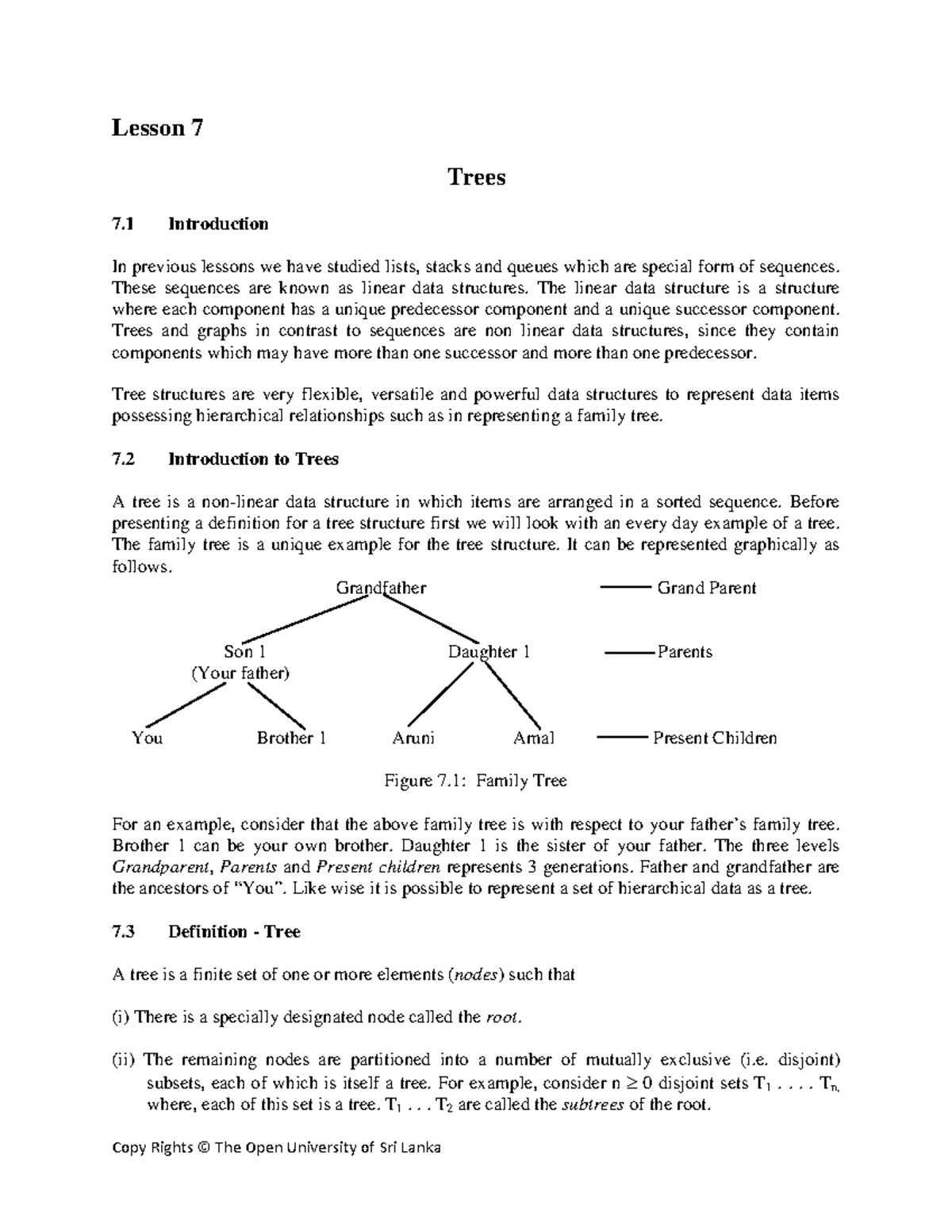

Lesson 7: Trees. 7 Introduction. In previous lessons we studied lists, stacks, and queues, which are special forms of sequences. These sequences are known as linear data structures. A linear data structure is one in which each component has a unique predecessor and a unique successor (apart from the first component, which has no predecessor, and the last, which has no successor).

CSC148 lecture notes. See the lectures page on Quercus for the readings assigned each week. You will eventually be responsible for all readings listed here, unless we clearly indicate otherwise. 1. Recapping and extending some key prerequisite material. 1.1 The Python memory model: introduction. 1.2 The Python memory model: functions and.

Lecture Notes: Tree Structures, Introduction

CS 486/686 Lecture 7. Learning goals: by the end of the lecture, you should be able to describe the components of a decision tree, construct a decision tree given an order of testing the features, determine the prediction accuracy of a decision tree on a test set, and compute the entropy of a probability distribution.

Lecture 5: Introduction to Trees and Binary Trees (Joanna Klukowska). 1 Tree terminology. A tree is a structure in which each node can have multiple successors (unlike the linear structures that we have been studying so far, in which each node always has at most one successor).

6 Decision trees. 6.1 Introduction. Decision tree algorithms can be considered an iterative, top-down construction method for the hypothesis (classifier). You can picture a decision tree as a hierarchy of decisions that fork or divide a dataset into subspaces. Decision trees can represent any Boolean (binary) function, and the.

1. Pick an attribute to split at a non-terminal node.
2. Split examples into groups based on attribute value.
3. For each group:
   - if no examples: return majority from parent
   - else if all examples in same class: return class
   - else loop to step 1

Zemel, Urtasun, Fidler (UofT), CSC 411: 06 Decision Trees.
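The snippets above mention computing entropy, building a decision tree from a fixed order of feature tests, and measuring accuracy on a test set. The following is a minimal Python sketch of those ideas, not the method of any of the quoted courses. The function names (`entropy`, `majority`, `build_tree`, `predict`, `accuracy`), the dictionary-based node layout, and the tie-breaking rule are all assumptions made for illustration. Each internal node keeps a `children` mapping, which also shows how a tree node can have several successors where a linear structure allows at most one.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy, in bits, of the empirical distribution of labels."""
    total = len(labels)
    counts = Counter(labels)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def majority(labels):
    """Most common label; ties go to the label seen first (an assumption)."""
    return Counter(labels).most_common(1)[0][0]


def build_tree(examples, features, parent_majority=None):
    """Build a tree by testing `features` in the given order.

    examples: list of (feature_dict, label) pairs.
    Returns a label (leaf) or a dict describing an internal node.
    """
    if not examples:                      # no examples: majority from parent
        return parent_majority
    labels = [label for _, label in examples]
    if len(set(labels)) == 1:             # all examples in the same class
        return labels[0]
    if not features:                      # nothing left to test on
        return majority(labels)

    feature, rest = features[0], features[1:]
    groups = {}                           # split examples by attribute value
    for x, label in examples:
        groups.setdefault(x[feature], []).append((x, label))

    here = majority(labels)
    children = {value: build_tree(group, rest, here)
                for value, group in groups.items()}
    # "default" stands in for attribute values with no training examples.
    return {"feature": feature, "children": children, "default": here}


def predict(tree, x):
    """Follow branches until a leaf label is reached."""
    while isinstance(tree, dict):
        tree = tree["children"].get(x[tree["feature"]], tree["default"])
    return tree


def accuracy(tree, test_set):
    """Fraction of (feature_dict, label) pairs predicted correctly."""
    correct = sum(predict(tree, x) == label for x, label in test_set)
    return correct / len(test_set)
```

Because the groups are built from the examples actually present, the "no examples" case only arises here for attribute values first seen at prediction time, which is why each internal node stores its own majority label as a fallback. The sketch takes the feature order as given; choosing the split attribute by information gain would use `entropy` to rank candidates instead.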
