Foundation Models, Transformers, BERT and GPT | Niklas Heidloff

BERT and GPT are both foundation models. Let's look at the definition and characteristics:

- Pre-trained on different types of unlabeled datasets (e.g., language and images)
- Self-supervised learning
- Generalized data representations which can be used in multiple downstream tasks (e.g., classification and generation)
- The Transformer architecture

By Niklas Heidloff. Foundation models are a game changer and a disruptor for many industries. Especially since ChatGPT was released, people have realized that a new era of AI has begun. In this blog I share my experience learning foundation models, with a focus on capabilities that IBM provides for enterprise clients and partners.
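To make "self-supervised learning" on unlabeled data more concrete, here is a minimal sketch of how training labels can be derived from raw text alone. The function names `next_token_pairs` and `masked_pairs` are hypothetical, and the masked positions are passed in by hand for clarity; real BERT-style training picks them at random.

```python
def next_token_pairs(tokens):
    """GPT-style objective: predict each token from the tokens before it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def masked_pairs(tokens, mask_positions, mask_token="[MASK]"):
    """BERT-style objective: hide chosen tokens and predict them from both sides.

    mask_positions is given explicitly here (an illustrative simplification).
    """
    masked = [mask_token if i in mask_positions else t for i, t in enumerate(tokens)]
    labels = {i: tokens[i] for i in sorted(mask_positions)}
    return masked, labels


# Unlabeled text is the only input; the labels come from the text itself.
tokens = "foundation models learn from unlabeled text".split()
causal_examples = next_token_pairs(tokens)
masked_input, masked_labels = masked_pairs(tokens, {1, 4})

print(causal_examples[0])
print(masked_input, masked_labels)
```

In both cases no human annotation is needed, which is why such models can be pre-trained on very large unlabeled datasets.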

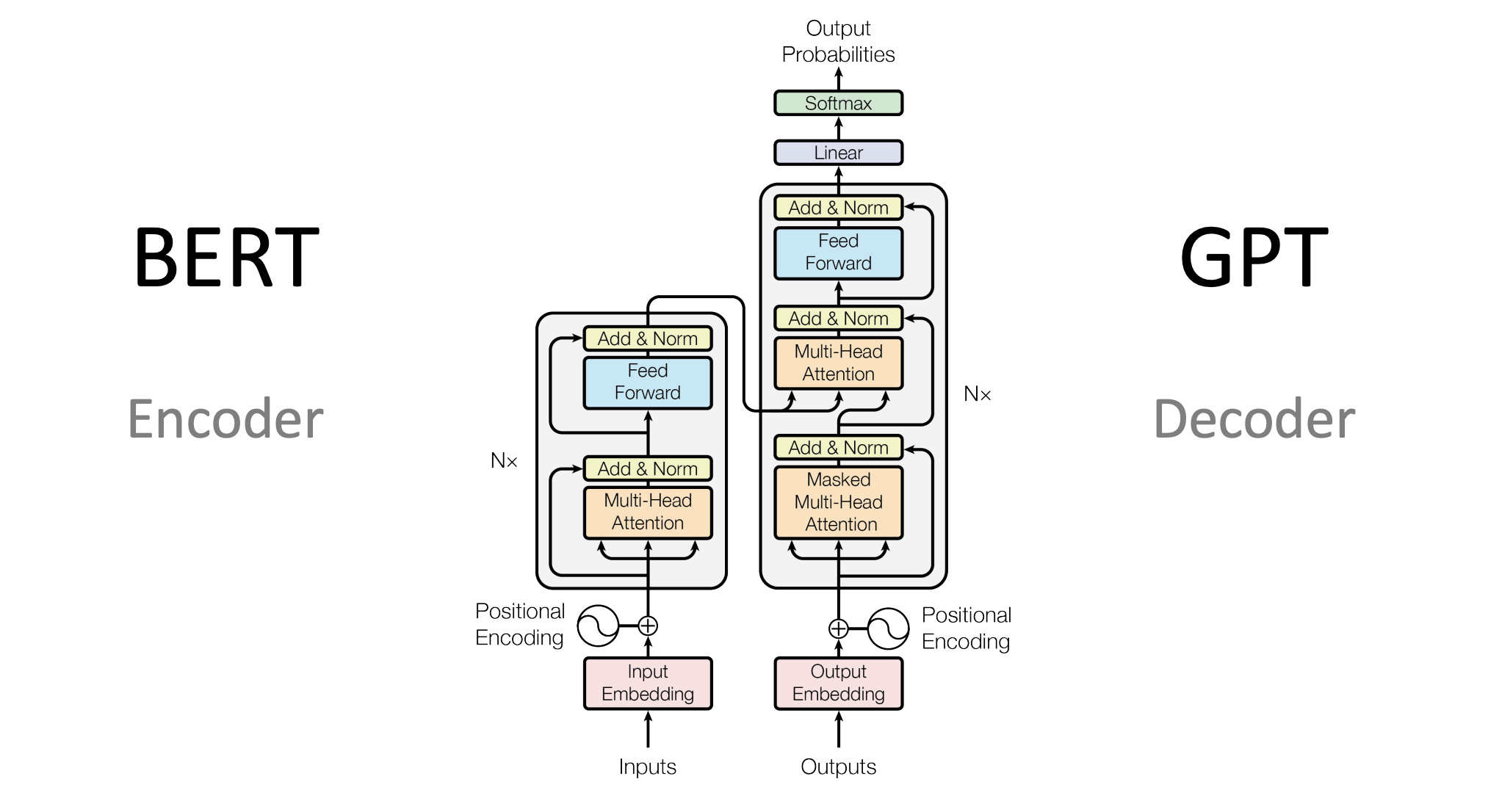

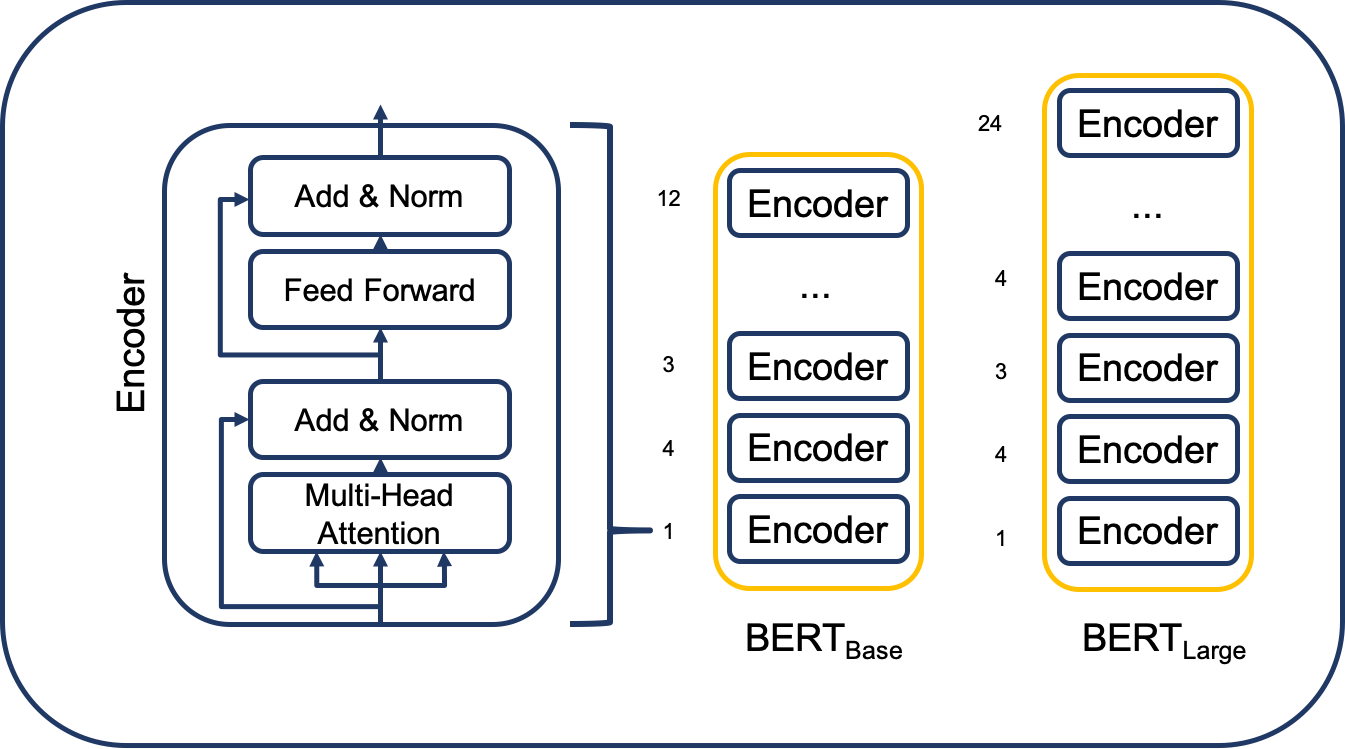

Foundation Models, Transformers, BERT and GPT. Since I'm excited by the incredible capabilities which technologies like ChatGPT and Bard provide, I'm trying to understand better how they work. This post summarizes my current understanding about ...

Foundation models are large-scale AI systems trained on extensive datasets. They can perform a wide range of tasks with remarkable accuracy. Unlike traditional AI models designed for specific tasks, foundation models have a broader scope and can adapt to numerous applications with minimal fine-tuning. The significance of foundation models lies ...

Pretrained foundation models (PFMs) are regarded as the foundation for various downstream tasks with different data modalities. A PFM (e.g., BERT, ChatGPT, and GPT-4) is trained on large-scale data which provides a reasonable parameter initialization for a wide range of downstream applications. BERT learns bidirectional encoder representations from Transformers, which are trained on large ...

Foundation model. A foundation model, also known as a large AI model, is a machine learning or deep learning model that is trained on broad data such that it can be applied across a wide range of use cases. [1] Foundation models have transformed artificial intelligence (AI), powering prominent generative AI applications like ChatGPT. [1]
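The word "bidirectional" in BERT's name can be illustrated with attention masks. The sketch below is a simplified illustration, not code from either model: an encoder like BERT lets every token attend to every other token, while a decoder like GPT uses a causal mask so that each token only sees itself and earlier positions. The function names are hypothetical.

```python
def bidirectional_mask(n):
    """Encoder-style (BERT): every position may attend to every position."""
    return [[1] * n for _ in range(n)]


def causal_mask(n):
    """Decoder-style (GPT): position i may attend only to positions j <= i."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


# Row i lists which positions token i is allowed to look at (1 = visible).
for row in bidirectional_mask(3):
    print(row)
for row in causal_mask(3):
    print(row)
```

This difference is one reason encoder models are often used for understanding tasks such as classification, while decoder models are used for generation.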

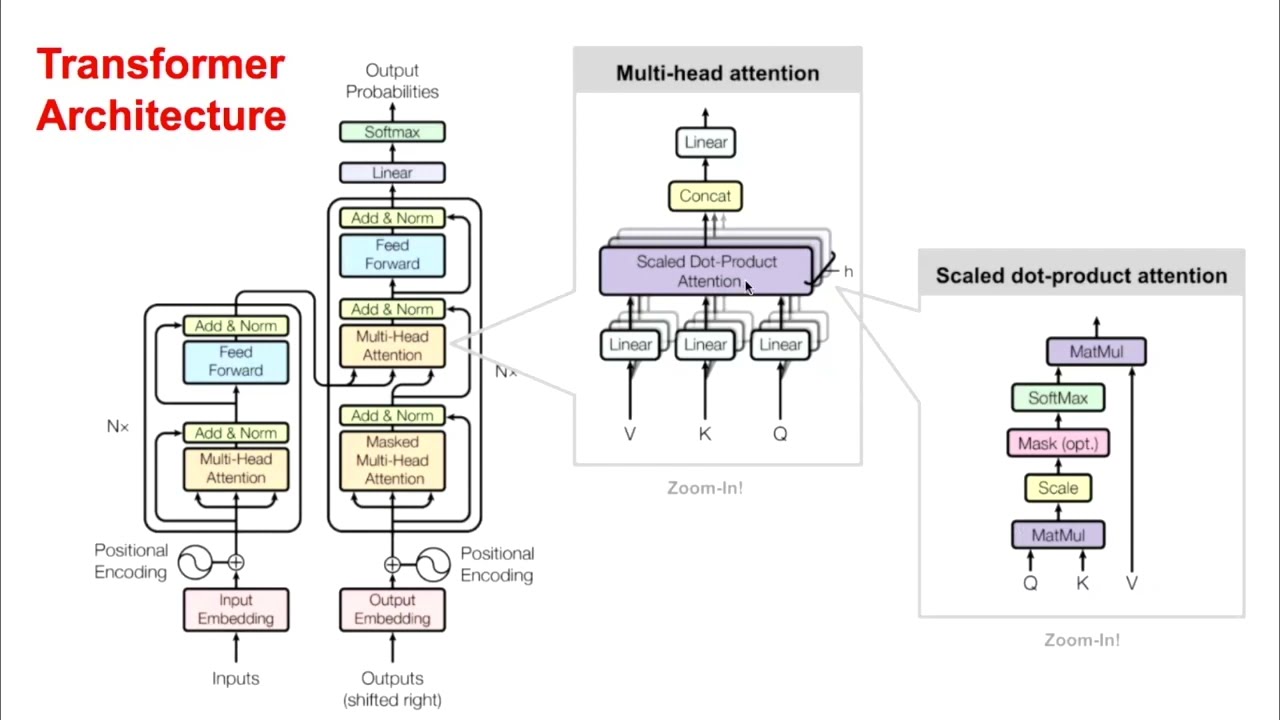

GPUs, the chips that power foundation models, have significantly increased in throughput and memory. Transformer model architecture: Transformers are the machine learning model architecture that powers many language models, such as BERT and GPT-4. Data availability: there is a lot of data for these models to train on and learn from.

The rise of Transformer-based models like BERT, GPT, and T5 has significantly impacted our daily lives. From the chatbots that assist us on websites to the voice assistants that answer our queries, these models play a pivotal role. Their ability to understand and generate human language has opened doors to countless applications.
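The core operation inside the Transformer architecture is scaled dot-product attention. The following is a minimal pure-Python sketch for small lists of vectors, written for readability rather than speed. The helper names `softmax` and `attention` are illustrative; production models use batched tensor libraries and multiple attention heads.

```python
import math


def softmax(xs):
    """Turn raw scores into weights that sum to 1 (numerically stable)."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(q . k / sqrt(d)) weighted sum of values."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt of the key dimension.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        outputs.append([
            sum(w * v[c] for w, v in zip(weights, values))
            for c in range(len(values[0]))
        ])
    return outputs


# Two identical keys receive equal weight, so the output is the mean of the values.
result = attention([[1.0, 0.0]], [[1.0, 0.0], [1.0, 0.0]], [[0.0, 2.0], [4.0, 6.0]])
print(result)
```

Because every query is compared with every key, this operation parallelizes well on GPUs, which ties in with the hardware point above.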


