Confluent Consumer Configuration

This topic provides Apache Kafka® consumer configuration parameters. The parameters are organized by order of importance, ranked from high to low. To learn more about consumers in Kafka, see the free Apache Kafka 101 course. Code samples for the consumer in different languages are available in the language-specific client guides.

Kafka Consumer Group Tool

Kafka includes the kafka-consumer-groups command-line utility for viewing and managing consumer groups, and the tool is also provided with Confluent Platform. You can find it in the bin folder under your installation directory. You can also use the Confluent CLI to complete some of these tasks.
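The consumer configuration parameters referenced above are plain string key-value pairs, and the Java client accepts them as a `java.util.Properties` object. As a minimal sketch, the snippet below parses a hypothetical `consumer.properties` file (held in a string so it runs without touching the disk) and then overrides one value in code. The keys are standard consumer settings, but the values, such as the broker address and `group.id`, are placeholders, and which settings you need depends on your cluster.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;
import java.util.TreeSet;

public class ConsumerConfigSketch {

    // Hypothetical contents of a consumer.properties file; values are placeholders.
    static final String FILE_CONTENTS = String.join("\n",
            "# Lines starting with # are comments in the properties format",
            "bootstrap.servers=localhost:9092",
            "group.id=consumer-tutorial",
            "key.deserializer=org.apache.kafka.common.serialization.StringDeserializer",
            "value.deserializer=org.apache.kafka.common.serialization.StringDeserializer",
            "auto.offset.reset=earliest");

    static Properties load() {
        Properties props = new Properties();
        try {
            // Parse key=value lines exactly as they would be read from a file
            props.load(new StringReader(FILE_CONTENTS));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        // Values can also be set, or overridden, programmatically
        props.setProperty("enable.auto.commit", "false");
        return props;
    }

    public static void main(String[] args) {
        Properties props = load();
        // Print in sorted key order for readable output
        for (String key : new TreeSet<>(props.stringPropertyNames())) {
            System.out.println(key + "=" + props.getProperty(key));
        }
    }
}
```

Passing `props` to the client constructor is left out so the sketch needs only the JDK; in a real application the same object would be handed to the consumer.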

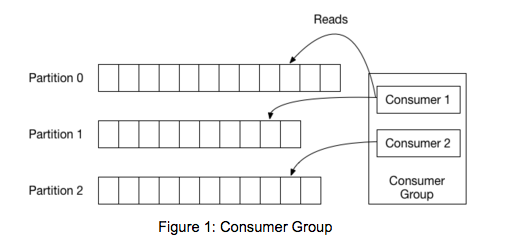

Apache Kafka® configuration refers to the settings and parameters that can be adjusted to optimize the performance, reliability, and security of a Kafka cluster and its clients. Kafka uses key-value pairs in the properties file format for configuration, and these values can be supplied either from a file or programmatically.

The consumer is constructed from a set of properties, just like the other Kafka clients. The example below provides the minimal configuration needed to use consumer groups:

```java
import java.util.Properties;

Properties props = new Properties();
props.put("bootstrap.servers", "localhost:9092"); // initial broker(s) to contact
props.put("group.id", "consumer-tutorial");       // consumers sharing this ID form one group
```

A working consumer also needs key and value deserializers, supplied either as properties or as constructor arguments.

Create new credentials for your Kafka cluster and Schema Registry, writing descriptions that make the keys easy to find and delete later. The Confluent Cloud Console then shows a client configuration with your new credentials automatically populated (make sure "Show API keys" is checked).

You can assign a group ID through configuration when you create the consumer client. If four consumers with the same group ID subscribe to the same topic, they share the work of reading from it. If the topic has eight partitions, each of the four consumers is assigned two partitions.
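To make the four-consumer, eight-partition arithmetic concrete, here is a small sketch of a range-style split for a single topic. It is a simplification under stated assumptions: in Kafka the split is computed by the assignor selected with `partition.assignment.strategy` (a range assignor is one option), whereas this sketch simply takes consumers in the order given, and the consumer names are hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class RangeAssignmentSketch {

    // Split partitions 0..numPartitions-1 of one topic into contiguous ranges,
    // one range per consumer; the first (numPartitions % consumers) members get one extra.
    static Map<String, List<Integer>> assign(List<String> consumers, int numPartitions) {
        Map<String, List<Integer>> result = new LinkedHashMap<>();
        int n = consumers.size();
        if (n == 0) {
            return result; // no group members, nothing to assign
        }
        int perConsumer = numPartitions / n;
        int extra = numPartitions % n;
        int next = 0;
        for (int i = 0; i < n; i++) {
            int count = perConsumer + (i < extra ? 1 : 0);
            List<Integer> partitions = new ArrayList<>();
            for (int j = 0; j < count; j++) {
                partitions.add(next++);
            }
            result.put(consumers.get(i), partitions);
        }
        return result;
    }

    public static void main(String[] args) {
        // Four consumers sharing the same group.id, one topic with eight partitions
        List<String> group = List.of("consumer-1", "consumer-2", "consumer-3", "consumer-4");
        assign(group, 8).forEach((c, p) -> System.out.println(c + " -> " + p));
    }
}
```

With more consumers than partitions, the extra consumers receive an empty list, which mirrors how surplus group members sit idle in Kafka.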

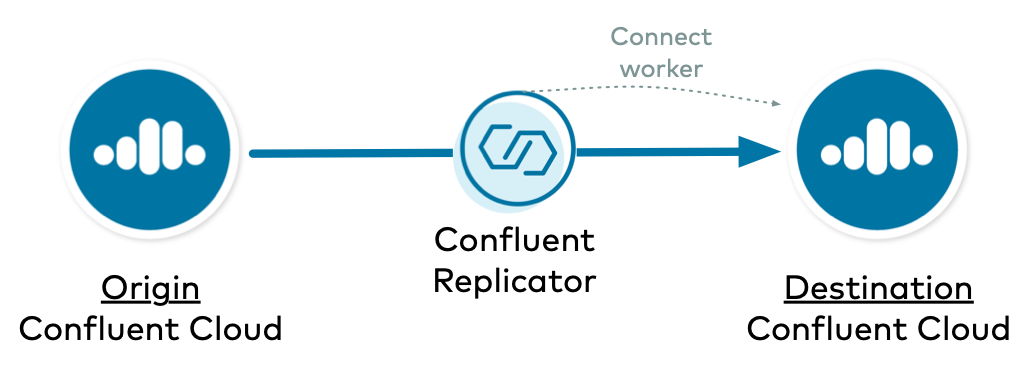

Confluent Kafka Consumer Best Practices

By LimePoint Engineering, January 25, 2024 (Confluent Cloud). Apache Kafka is a robust and scalable platform for building real-time streaming systems. Kafka consumer applications are critical components that enable organizations to consume, process, and analyze data in real time.

This script was deliberately simple, but the steps of configuring your consumer, subscribing to a topic, and polling for events are common across all consumers. For more details on additional configuration changes you may want to experiment with in your consumer, see the getting-started guides on Confluent.
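The configure, subscribe, and poll steps can be illustrated without a running broker. The sketch below is a toy, in-memory stand-in rather than the Kafka client API: the `ToyConsumer` class, its methods, and the `orders` topic are hypothetical, and it models a single partition with no rebalancing. It borrows the real `max.poll.records` setting name to size each batch and commits after processing, so the committed offset always names the next record to read, which matches Kafka's convention.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.Properties;

public class PollLoopSketch {

    // Hypothetical single-partition stand-in for a consumer; NOT the Kafka client API.
    static class ToyConsumer {
        private final Map<String, List<String>> topics;
        private final int maxPollRecords;
        private List<String> log = List.of();
        private int position = 0;   // offset of the next record poll() will return
        private int committed = 0;  // committed offset: the next record to read after a restart

        ToyConsumer(Properties config, Map<String, List<String>> topics) {
            this.topics = topics;
            // Reuse the real setting name to size batches; 500 is Kafka's default
            this.maxPollRecords = Integer.parseInt(config.getProperty("max.poll.records", "500"));
        }

        // Subscribe, resuming from the last committed offset
        void subscribe(String topic) {
            log = topics.getOrDefault(topic, List.of());
            position = committed;
        }

        // Return up to maxPollRecords records starting at the current position
        List<String> poll() {
            int end = Math.min(position + maxPollRecords, log.size());
            List<String> batch = new ArrayList<>(log.subList(position, end));
            position = end;
            return batch;
        }

        void commit() {
            committed = position;
        }

        int committed() {
            return committed;
        }
    }

    static List<String> run(int maxPollRecords, List<String> events) {
        // Step 1: configure
        Properties config = new Properties();
        config.setProperty("max.poll.records", String.valueOf(maxPollRecords));
        ToyConsumer consumer = new ToyConsumer(config, Map.of("orders", events));

        // Step 2: subscribe
        consumer.subscribe("orders");

        // Step 3: poll until the toy log is drained; a real consumer would poll indefinitely
        List<String> processed = new ArrayList<>();
        List<String> batch;
        while (!(batch = consumer.poll()).isEmpty()) {
            processed.addAll(batch);  // "process" the records
            consumer.commit();        // commit only after the batch is processed
        }
        return processed;
    }

    public static void main(String[] args) {
        System.out.println(run(2, List.of("e0", "e1", "e2", "e3", "e4")));
    }
}
```

Committing after processing, rather than before, means a crash between poll and commit causes records to be read again instead of skipped, which is the usual at-least-once trade-off.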
